In the Part 1 of AlloyDB Blog Series, I discussed the Introduction of AlloyDB for PostgresSQL. Then in Part 2 of the blog series, I discussed how to do the installation of AlloyDB Omni in a GCE VM. In Part 3 of the blog series, I discussed how to connect to AlloyDB Omni using psql and pgAdmin. In Part 4 of the blog series, I discussed how to load TPCH data into the AlloyDB Omni instance and check out AlloyDB Omni’s columnar engine. In Part 5 of the blog series, I will discuss how to create a new VM with the same vCPU and memory setting as the VM used for AlloyDB Omni in the previous blogs, install PostgreSQL database server, load TPCH data and see the query performance there. Time for a duel, AlloyDB Omni vs PostgreSQL, which one is better?

Create VM for PostgreSQL Database Server

Create a GCP VM with the exact configuration used in Part 2 installation of AlloyDB Omni in a GCE VM.

export VM_NAME=pgdb

export VM_DISK_SIZE=50

export VM_MACHINE_TYPE=n2-highmem-4

export VM_OS_IMAGE=projects/centos-cloud/global/images/centos-stream-9-v20230711

export GCP_PROJECT=<put your project id here>

export GCP_ZONE=us-west4-b

export GCP_SUBNET=<put your subnet here>

export GCP_SERVICE_ACCOUNT=<put your GCP Service account here>

gcloud compute instances create $VM_NAME \

--project=$GCP_PROJECT \

--zone=$GCP_ZONE \

--machine-type=$VM_MACHINE_TYPE \

--network-interface=network-tier=PREMIUM,subnet=$GCP_SUBNET,no-address \

--metadata=enable-os-login=true \

--no-address \

--maintenance-policy=MIGRATE \

--provisioning-model=STANDARD \

--service-account=$GCP_SERVICE_ACCOUNT \

--scopes=https://www.googleapis.com/auth/devstorage.read_write,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append \

--create-disk=auto-delete=yes,boot=yes,device-name=instance-1,image=$VM_OS_IMAGE,mode=rw,size=$VM_DISK_SIZE,type=projects/alloydb-omni/zones/us-central1-a/diskTypes/pd-ssdConfigure VM and Install PostgreSQL v15 Database

Create a new user, pguser.

gcloud compute ssh --zone $GCP_ZONE $VM_NAME --tunnel-through-iap --project $GCP_PROJECT

sudo yum install -y yum-utils wget unzip

export PGDB_USER=pguser

sudo useradd -g adm -G google-sudoers ${PGDB_USER}

groups

id ${PGDB_USER}

sudo timedatectl set-timezone America/Chicago

ls -l /etc/localtime

sudo su ${PGDB_USER}

cd

pwd Install and configure PostgreSQL database

sudo su -

dnf module -y install postgresql:15

postgresql-setup --initdb

systemctl enable --now postgresqlHere is the result output

[pguser@pgdb weidong.zhou]$ sudo su -

[root@pgdb ~]# dnf module -y install postgresql:15

Last metadata expiration check: 0:01:24 ago on Mon 21 Aug 2023 06:45:28 PM CDT.

Dependencies resolved.

=======================================================================================================================================

Package Architecture Version Repository Size

=======================================================================================================================================

Installing group/module packages:

postgresql-server x86_64 15.2-1.module_el9+264+92dde3f0 appstream 6.1 M

Installing dependencies:

postgresql x86_64 15.2-1.module_el9+264+92dde3f0 appstream 1.7 M

postgresql-private-libs x86_64 15.2-1.module_el9+264+92dde3f0 appstream 138 k

Installing module profiles:

postgresql/server

Enabling module streams:

postgresql 15

Transaction Summary

=======================================================================================================================================

Install 3 Packages

Total download size: 8.0 M

Installed size: 31 M

Downloading Packages:

(1/3): postgresql-private-libs-15.2-1.module_el9+264+92dde3f0.x86_64.rpm 333 kB/s | 138 kB 00:00

(2/3): postgresql-15.2-1.module_el9+264+92dde3f0.x86_64.rpm 2.5 MB/s | 1.7 MB 00:00

(3/3): postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64.rpm 6.0 MB/s | 6.1 MB 00:01

---------------------------------------------------------------------------------------------------------------------------------------

Total 5.9 MB/s | 8.0 MB 00:01

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : postgresql-private-libs-15.2-1.module_el9+264+92dde3f0.x86_64 1/3

Installing : postgresql-15.2-1.module_el9+264+92dde3f0.x86_64 2/3

Running scriptlet: postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64 3/3

Installing : postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64 3/3

Running scriptlet: postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64 3/3

Verifying : postgresql-15.2-1.module_el9+264+92dde3f0.x86_64 1/3

Verifying : postgresql-private-libs-15.2-1.module_el9+264+92dde3f0.x86_64 2/3

Verifying : postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64 3/3

Installed:

postgresql-15.2-1.module_el9+264+92dde3f0.x86_64 postgresql-private-libs-15.2-1.module_el9+264+92dde3f0.x86_64

postgresql-server-15.2-1.module_el9+264+92dde3f0.x86_64

Complete!

[root@pgdb ~]# postgresql-setup --initdb

* Initializing database in '/var/lib/pgsql/data'

* Initialized, logs are in /var/lib/pgsql/initdb_postgresql.log

[root@pgdb ~]# systemctl enable --now postgresql

Created symlink /etc/systemd/system/multi-user.target.wants/postgresql.service → /usr/lib/systemd/system/postgresql.service.Create Database/User and Copy Data/Scripts

Run the following to create database and user, then change the password for both postgres and pguser.

su - postgres

export PGDB_USER=pguser

createuser ${PGDB_USER}

createdb tpch_db -O ${PGDB_USER}

psql -c "select usename from pg_user;"

psql -l

psql

show server_version;

\conninfo

alter user postgres with password 'postgres123';

alter user pguser with password 'pguser123';

\q

exit Here is the result looks like. Note: the database is also the same version of v15.2 as my AlloyDB Omni database in the last blog, Load TPCH Data into AlloyDB Omni.

[root@pgdb data]# su - postgres

[postgres@pgdb ~]$ export PGDB_USER=pguser

[postgres@pgdb ~]$ createuser ${PGDB_USER}

[postgres@pgdb ~]$ createdb tpch_db -O ${PGDB_USER}

[postgres@pgdb ~]$ psql -c "select usename from pg_user;"

usename

----------

postgres

pguser

(2 rows)

[postgres@pgdb ~]$ psql -l

List of databases

Name | Owner | Encoding | Collate | Ctype | ICU Locale | Locale Provider | Access privileges

-----------+----------+----------+-------------+-------------+------------+-----------------+-----------------------

postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | | libc |

template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

tpch_db | pguser | UTF8 | en_US.UTF-8 | en_US.UTF-8 | | libc |

(4 rows)

[postgres@pgdb ~]$ psql

psql (15.2)

Type "help" for help.

postgres=# show server_version;

server_version

----------------

15.2

(1 row)

postgres=# \conninfo

You are connected to database "postgres" as user "postgres" via socket in "/var/run/postgresql" at port "5432".

postgres=# alter user postgres with password 'postgres123';

ALTER ROLE

postgres=# alter user pguser with password 'pguser123';

ALTER ROLE

postgres=# \q

[postgres@pgdb ~]$ exit

logout

[root@pgdb data]# Copy TPCH data and related SQL scripts

I have already copied the TPCH data and related SQL scripts to a GCS bucket. So right now just need to copy the data and script to the right folder

su - pguser

cd

mkdir tpch

gsutil cp -r gs://<your GCS bucket>/database/tpch .

ls -l tpch/tpch1g

ls -l tpch/ddl

sed -i 's/alloyuser/pguser/' tpch/ddl/tpch_load_1g_data.sql

cat tpch/ddl/tpch_load_1g_data.sql

Create Database/Schema/Tables and Load TPCH Data

Run the following to create database/schema/tables and then load TPCH data. Make sure you are still in the session of pguser OS user.

psql -d tpch_db

\conninfo

create schema tpch1g;

SET search_path TO tpch1g, public;

##### Copy the DDL and datafile

\set cur_schema_name 'tpch1g'

\set cur_user 'pguser'

\set root_path '/home/pguser/tpch'

select :'root_path' "root_path";

select :'cur_schema_name' "cur_schema_name";

select :'cur_user' "cur_user";

GRANT SELECT, INSERT, UPDATE, DELETE, TRUNCATE, REFERENCES, TRIGGER ON ALL TABLES IN SCHEMA :"cur_schema_name" TO :"cur_user";

ALTER DEFAULT PRIVILEGES IN SCHEMA :"cur_schema_name" GRANT SELECT, INSERT, UPDATE, DELETE, TRUNCATE, REFERENCES, TRIGGER ON TABLES TO :"cur_user";

\i :root_path/ddl/tpch_create_table.sql

\i :root_path/ddl/tpch_create_index.sql

\i :root_path/ddl/tpch_load_1g_data.sql

\qCompare TPCH Query Performance Between AlloyDB Omni and Postgres

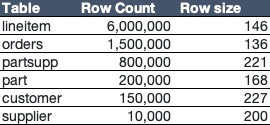

Ok, right now. I have all of the data ready. Let’s do the comparison. The first query is the Query 6 I used in the last blog, Load TPCH Data into AlloyDB Omni. The performance is about 280 ms without enable_columnar_scan parameter setting to off and 27ms after the parameter was set to on and execute google_columnar_engine_recommend. Let’s how Postgres performs. The result is shown below.

tpch_db=> \timing on

Timing is on.

tpch_db=> SET search_path TO tpch1g, public;

SET

Time: 0.210 ms

tpch_db=> select

sum(l_extendedprice * l_discount) as revenue

from

lineitem

where

l_shipdate >= date '1995-01-01'

and l_shipdate < date '1995-01-01' + interval '1' year

and l_discount between 0.09 - 0.01 and 0.09 + 0.01

and l_quantity < 24

limit 1;

revenue

----------------

185724059.4734

(1 row)

Time: 622.051 ms

tpch_db=> select

sum(l_extendedprice * l_discount) as revenue

from

lineitem

where

l_shipdate >= date '1995-01-01'

and l_shipdate < date '1995-01-01' + interval '1' year

and l_discount between 0.09 - 0.01 and 0.09 + 0.01

and l_quantity < 24

limit 1;

revenue

----------------

185724059.4734

(1 row)

Time: 579.368 ms

tpch_db=> select

sum(l_extendedprice * l_discount) as revenue

from

lineitem

where

l_shipdate >= date '1995-01-01'

and l_shipdate < date '1995-01-01' + interval '1' year

and l_discount between 0.09 - 0.01 and 0.09 + 0.01

and l_quantity < 24

limit 1;

revenue

----------------

185724059.4734

(1 row)

Time: 591.576 msI execute the query three times and get result ranging from 579 to 622 ms. So for AlloyDB Omni, the performance is about 2 times faster without columnar engine or 20 times faster with columnar engine. This is great performance.

Let me try another query, Query 2 : Minimum Cost Supplier Query. This query finds which supplier should be selected to place an order for a given part in a given region. The query is shown below:

select

s_acctbal,

s_name,

n_name,

p_partkey,

p_mfgr,

s_address,

s_phone,

s_comment

from

part,

supplier,

partsupp,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and p_size = 25

and p_type like '%STEEL'

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

and ps_supplycost = (

select

min(ps_supplycost)

from

partsupp,

supplier,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

)

order by

s_acctbal desc,

n_name,

s_name,

p_partkey

LIMIT 100;Here is the result looks like

| Database Type | Columnar Engine | Execution SeqNo | Execution Time |

|---|---|---|---|

| AlloyDB Omni (v15.2) | Off | 1 | 300.949 ms |

| Off | 2 | 296.547 ms | |

| On | 1 | 207.195 ms | |

| On | 2 | 170.969 ms | |

| PostgreSQL (v15.2) | N/A | 1 | 430.005 ms |

| N/A | 2 | 394.203 ms |

So the result above shows AlloyDB Omni is slightly better, about 30% faster, without columnar engine, and more than 2 times faster with columnar engine on.

So AlloyDB Omni is indeed faster from my test cases than PostgreSQL. Please note, AlloyDB Omni is a standalone version of AlloyDB that can be run anywhere. The AlloyDB cluster running on the GCP infrastructure will be much faster compared with the single instance AlloyDB Omni. AlloyDB Omni is definitely a good way to test out AlloyDB columnar store anywhere you want. As many people are concerning the rising cost of cloud based resource, AlloyDB Omni is a good and cheaper way to use for DEV and TEST environment while AlloyDB cluster can be used in Pre-Prod or Production environment.

In the next blog, I will discuss how to create AlloyDB cluster and access it from laptop.

You must be logged in to post a comment.