Previously, I discussed the followings:

In the last few posts, I discussed the following:

1. Install Cloudera Hadoop Cluster using Cloudera Manager

2. Configurations after CDH Installation

3. Load Data to Hive Table.

4. Import Data to Hive from Oracle Database

5. Export data from Hive table to Oracle Database.

Although Hive is popular in Hadoop world, it has its own drawback, like excessive Map/Reduce operations for certain queries and JVM overhead during Map/Reduce. Impala is designed to improve the query performance accessing data on HDFS. Hive is SQL on Hadoop while Impala is the SQL on HDFS. Hive is using MapReduce job to get the query result while Impala is using the its daemons running on the data nodes to directly access the files on HDFS and don’t use Map/Reduce at all.

There are two ways to use Impala to query tables in Hive. One way is to use command line, Impala Shell. Another one is to use Hue GUI. I am going to show both methods one by one.

Use Impala Shell

Impala Shell is a nice tool similar to SQL Plus to setup database and tables and issue queries. The speed of ad hoc queries is much faster than Hive’s query, especially for queries requiring fast response time. Here are the steps in using Impala shell.

1. Logon as wzhou user and start the impala shell.

[wzhou@vmhost1 ~]$ impala-shell

Starting Impala Shell without Kerberos authentication

Error connecting: TTransportException, Could not connect to vmhost1.local:21000

Welcome to the Impala shell. Press TAB twice to see a list of available commands.

Copyright (c) 2012 Cloudera, Inc. All rights reserved.

(Shell build version: Impala Shell v2.2.0-cdh5.4.3 (517bb0f) built on Wed Jun 24 19:17:40 PDT 2015)

[Not connected] >

Note: The prompt shows Not connected. I need to connect the Impala shell to any Data Node with impalad daemon. My cluster is using vmhost2 and vmhost3 as Data Node. So I pick any one of them, use vmhost2 for this test.

[Not connected] > connect vmhost2;

Connected to vmhost2:21000

Server version: impalad version 2.2.0-cdh5.4.3 RELEASE (build 517bb0f71cd604a00369254ac6d88394df83e0f6)

[vmhost2:21000] >

2. Run some queries. Impala can see the same list of databases and tables like Hive does.

[vmhost2:21000] > show databases; Query: show databases +------------------+ | name | +------------------+ | _impala_builtins | | default | | test1 | | test_oracle | +------------------+ Fetched 4 row(s) in 0.01s [vmhost2:21000] > use test_oracle; Query: use test_oracle [vmhost2:21000] > show tables; Query: show tables +----------------------+ | name | +----------------------+ | my_all_objects | | my_all_objects_sqoop | +----------------------+ Fetched 2 row(s) in 0.01s [vmhost2:21000] > select * from my_all_objects_sqoop limit 3; Query: select * from my_all_objects_sqoop limit 3 +-------+-------------+-----------+-------------+-------------+ | owner | object_name | object_id | object_type | create_date | +-------+-------------+-----------+-------------+-------------+ | SYS | I_USER1 | 46 | INDEX | 2013-03-12 | | SYS | I_OBJ# | 3 | INDEX | 2013-03-12 | | SYS | I_IND1 | 41 | INDEX | 2013-03-12 | +-------+-------------+-----------+-------------+-------------+ Fetched 3 row(s) in 0.04s [vmhost2:21000] > select count(*) from my_all_objects_sqoop; Query: select count(*) from my_all_objects_sqoop +----------+ | count(*) | +----------+ | 22519 | +----------+ Fetched 1 row(s) in 1.00s

Use Hue Web UI

Another way to use Impala is to run query from Hue UI.

1. From Cloudera Manager screen, click Hue. After Hue screen shows up, click Hue Web UI.

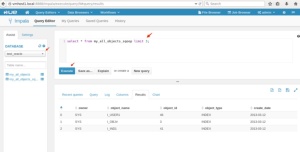

2. On Hue home screen, click Query Editors, then choose Impala.

3. After Impala Query Editor screen shows up, select test_oracle under DATABASE. Input the following query, then click Execute.

select * from my_all_objects_sqoop limit 3;

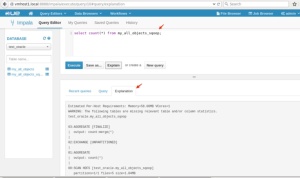

4. Run another query and check out Explain plan.

Run Time Comparison between Hive and Impala

Hive

hive> use test_oracle;

OK

Time taken: 0.534 seconds

hive> show tables;

OK

my_all_objects

my_all_objects_sqoop

Time taken: 0.192 seconds, Fetched: 2 row(s)

hive> select count(*) from my_all_objects;

Query ID = wzhou_20150922112626_efde0c06-2f04-44b2-9bf1-47b31e45de03

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1442935988315_0002, Tracking URL = http://vmhost1.local:8088/proxy/application_1442935988315_0002/

Kill Command = /usr/lib/hadoop/bin/hadoop job -kill job_1442935988315_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2015-09-22 11:26:33,759 Stage-1 map = 0%, reduce = 0%

2015-09-22 11:26:46,563 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.25 sec

2015-09-22 11:26:58,035 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.97 sec

MapReduce Total cumulative CPU time: 3 seconds 970 msec

Ended Job = job_1442935988315_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.97 sec HDFS Read: 2057365 HDFS Write: 6 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 970 msec

OK

22782

Time taken: 40.881 seconds, Fetched: 1 row(s)

Impala

Wow, 41 seconds to get a row count of 22,782 by using Hive. That seem excessive on a cluster no other jobs running. Ok, let’s look at Impala’s result.

Note: Impala does not poll frequently for metadata changes. So in case you don’t see the table name after the import, just do the following:

invalidate metadata;

show tables;

[wzhou@vmhost1 ~]$ impala-shell

[Not connected] > connect vmhost2;

Connected to vmhost2:21000

Server version: impalad version 2.2.0-cdh5.4.3 RELEASE (build 517bb0f71cd604a00369254ac6d88394df83e0f6)

[vmhost2:21000] > use test_oracle;

Query: use test_oracle

[vmhost2:21000] > show tables;

Query: show tables

+———————-+

| name |

+———————-+

| my_all_objects |

| my_all_objects_sqoop |

+———————-+

Fetched 2 row(s) in 0.01s

[vmhost2:21000] > select count(*) from my_all_objects;

Query: select count(*) from my_all_objects

+———-+

| count(*) |

+———-+

| 22782 |

+———-+

Fetched 1 row(s) in 0.12s

The above result shows Hive took 41 seconds to get the row count of a table with 22, 782 rows while Impala was significant faster and took 0.12 seconds. I know my cluster is small, not powerful and hive is using Map/Reduce. But getting a total row count of 22,000 needs 45 seconds, it seems too much. On the other hand, Impala’s timing looks more reasonable to me. Obviously Map/Reduce is not my option if I want to run some queries that expect fast response time. But if executing a long running job against a huge dataset, Map/Reduce option might still be on the table if considering job fault tolerance.

You must be logged in to post a comment.