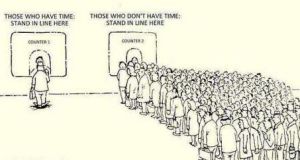

My last post discusses the steps to move stats to Exadata environment. During the same cutover window, we actually did another task: move certain SQL Profiles from UAT environment to PROD environment. Just like photo below, let’s do the moving in one run.

For SQL Profile, I usually use it as the last approach to resolve production performance issue. I always recommend my clients to change application code to tune the poor performed queries first. Only at the time no code change is allowed and production database is suffering from bad queries significantly, SQL Profiles will be used. For a large legacy database suffering from poor performance in the past, it’s not surprise to see many SQL Profiles in the legacy database. If there are only 10 or 20 SQL Profiles, it can be manageable. But there is no way to maintain hundreds, even thousands SQL Profiles in the database, especially during migration from 11.2.0.3 Non-Exadata to 11.2.0.4 Exadata environment. So after we restore the legacy database into an UAT environment on Exadata, we disabled all SQL Profiles in the database. During the testing, if query performance was not good in Exadata environment, we implemented new SQL Profile or reused the old one if existing one performed better. By using strategy, we cut down the SQL Profiles from 256 before migration to 9 during the migration. During the cutover window, we only need to move these 9 SQL Profiles from UAT to PROD environment.

The followings are the sample steps for moving SQL Profiles. It uses the same db_my_stats schema created in the previous post. Here is the summary of the steps.

1. Copy the enabled SQL Profile to a staging table under the same db_my_stats schema created in the last post.

2. Export the staging table.

3. Copy the export data pump files to PROD database on Exadata.

4. Disable all SQL Profile in PROD database.

5. Import the enabled SQL Profiles in PROD database.

Step 2 and 3 are discussed in my last post and I am not going to repeat them here. Only step 4 and 5 are performed during cutover window. Here are the sample scripts to illustrate the steps:

Copy the enabled SQL Profile to a staging table

Generate necessary sql scripts.

vi gen_exp_imp_sqlprofile.sql

set echo off set feedback off set lines 150 set pages 0 prompt ---------------- Generate SQL Profile Export and Import ----------------- prompt prompt -- generate SQL to export SQL profile prompt spool EXPORT_TO_SQLPROFILE_TABLE.SQL select 'spool EXPORT_TO_SQLPROFILE_TABLE.log' from dual; select 'EXEC DBMS_SQLTUNE.PACK_STGTAB_SQLPROF ( ' || 'staging_table_name => ''PROFILE_STG'', profile_name=>''' || name || '''); ' content from dba_sql_profiles where status = 'ENABLED' order by name; select 'spool off' from dual; prompt prompt -- generate SQL to get total rows in the SQL Profile table prompt spool GET_EXPORT_SQL_PROFILE_ROW_COUNT.SQL select 'spool GET_EXPORT_SQL_PROFILE_ROW_COUNT.log' from dual; select 'prompt SQL profiles qualifed for export ' from dual; select 'SELECT name, status FROM dba_sql_profiles where status = ''ENABLED'' order by name;' from dual; select 'prompt SQL profiles exported ' from dual; select 'SELECT count(*) FROM DB_MY_STATS.PROFILE_STG;' from dual; select 'select obj_name, obj_type, sql_handle status from dbmir.PROFILE_STG order by object_name;' from dual; select 'spool off' from dual; prompt prompt -- generate SQL to import SQL profile prompt spool IMPORT_TO_SQLPROFILE_TABLE.SQL select 'spool IMPORT_TO_SQLPROFILE_TABLE.log' from dual; select 'EXEC DBMS_SQLTUNE.UNPACK_STGTAB_SQLPROF(REPLACE => TRUE, ' || 'staging_table_name => ''PROFILE_STG'', profile_name=>''' || name || '''); ' content from dba_sql_profiles where status = 'ENABLED' order by name; select 'spool off' from dual; spool off

Run the gen script.

@gen_exp_imp_sqlprofile

Export SQL Profile

sqlplus / as sysdba

connect db_my_stats/db_my_stats

Create SQL profile staging table

EXEC DBMS_SQLTUNE.CREATE_STGTAB_SQLPROF (table_name => ‘PROFILE_STG’, schema_name=>’DB_MY_STATS’);

Run the script to export SQL Profile to the staging table and get the row count for the exported profiles.

@EXPORT_TO_SQLPROFILE_TABLE.SQL

@GET_EXPORT_SQL_PROFILE_ROW_COUNT.SQL

At this moment, the export is done. Then follows the export and import step from last post to populate the staging table PROFILE_STG in the target database.

Disable all SQL Profiles

vi disable_all_sqlprofile.sql

BEGIN

for r in

( select name, category, status, sql_text, force_matching

from dba_sql_profiles

order by category, name)

loop

DBMS_SQLTUNE.ALTER_SQL_PROFILE (name => r.name, attribute_name => 'STATUS', value => 'DISABLED');

end loop;

END;

/

Run the script.

@disable_all_sqlprofile.sql

Import SQL Profile

@IMPORT_TO_SQLPROFILE_TABLE.SQL

Verify the the enabled SQL Profile

select name, category, type, status from dba_sql_profiles

where status = ‘ENABLED’;

Ok, we are done with the moving of SQL Profile here.

You must be logged in to post a comment.