Recently we were working on another 130+TB database with 5200+ datafiles. After the database was upgraded from 11.2.0.3 to 11.2.0.4 on a full rack X4 Exadata, I did some verifications and noticed my query (using dba_free_space and dba_data_files) to find out tablespace usage took forever and never completed. I have run the same query on other 100+ TB databases and it could take some time, but never run into the situation that it has never completed.

I pulled out the execution plan for the query and found out the majority of was on fixed table X$KTFBUE. The plan was doing full table scan. At the time I killed the query, the actual row processed was 10 million rows while the estimate total number of rows was 100,000 rows. By the way, 100,000 rows is the default value for X$KTFBUE when no stats is available. Obviously it is related to STATS issue. Then I run the query below to find out whether X$KTFBUE has STATS or not.

select * from dba_tab_statistics where table_name='X$KTFBUE';

It indeed did not have stats. Maybe we forgot to collect the fixed object stats. Run the queries below and find out we had stats in the majority of fixed tables.

SELECT * FROM DBA_TAB_STATISTICS WHERE OBJECT_TYPE='FIXED TABLE'; SELECT * FROM DBA_TAB_STATISTICS WHERE OBJECT_TYPE='FIXED TABLE' and last_analyzed is null;

Maybe we had issue during gathering fixed objects. So I rerun the stats collection shown below for fixed objects.

SQL> exec DBMS_STATS.GATHER_FIXED_OBJECTS_STATS; PL/SQL procedure successfully completed. Elapsed: 00:05:19.42

It completed successfully in 5 minutes. Checked out X$KTFBUE, still no STATS. This became interesting to me. With a fixed table having at least 10+ million rows and seem to be one of important fixed table to get tablespace sizing information, why is it excluded from fixed object stats collection?

With this question in mind, I did some research. There is a document discussing why STATS is missing for certain fixed tables: Why do some Fixed Tables not have Optimizer Statistics (Doc ID 1355608.1). Here is the answer in the document.

Why do some fixed tables not have statistics even after running: DBMS_STATS.GATHER_FIXED_OBJECTS_STATS?

Some fixed tables are left without stats because the development team has flagged the code to avoid statistics gathering for some fixed tables. This is because they know they will perform better without stats or because it is not supported to gather the statistics upon them.

This was confirmed in : Bug 12844116 fixed tables statistics

The document applies to version 10.2.0.4 to 11.2.0.2. Obviously it is covered version 11.2.0.4 in my case.

Another document also discussed the similar behavior: Query Against DBA_FREE_SPACE is Slow After Applying 11.2.0.4 (Doc ID 1904677.1). The document mentions two major symptoms that simialr to my issue:

1. Slow query from dba_free_space after applying 11.2.0.4

2. The query is doing a Full Tabble Scan (FTS) against X$KTFBUE.

Some fixed tables(X$) have no CBO statistics against them and use defaults; it may be too expensive to gather stats or the tables are too volatile such that any statistics are immediately outdated.

The sys.x$ktfbue table is one such object. These statistical inaccuracies can cause suboptimal plans in some cases.

The document mentioned gathering stats on x$ktfbue table. So I went ahead collecting stats as follows:

EXEC DBMS_STATS.gather_table_stats('SYS', 'X$KTFBUE');

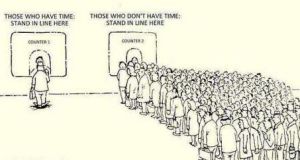

As it took only 5 minutes to collect fixed object stats, I thought a couple of minutes or maxmium 10 minutes should complete the stats gathering for X$KTFBUE table. I was so wrong, one hour, two hours passed and stats job was still going. Maybe the gather_table_stats was stuck somewhere. Check out OEM SQL Monitor, I can see the number of rows processed still going up slowly. I was just like the above squirrel patiently waiting for the finish. Finally, after 7.5 hours it completed successfully. The table has about 99 million rows, about 1000 times more than default value of 100,000 rows. Now I understand why this fixed table is excluded from GATHER_FIXED_OBJECTS_STATS. It is just too expensive to collect stats. Majority of time it should not cause an issue. This is the first Exadata database I notices having fixed object issue after running GATHER_FIXED_OBJECTS_STATS.

During the time I waited for the stats gathering completion, I found another Oracle Document: Query against DBA_EXTENTS slow after upgrade to 11.2.0.3 (Doc ID 1453425.1). This applies to database version 11.2.0.3 and later. Here are some part of the note:

SYMPTOMS

Queries on DBA_EXTENTS are much slower due to the presence of a merge join cartesian.

This can also influence the performance of script used to collect upgrade/migrate diagnostics as per instructions in:

Document 556610.1 Script to Collect DB Upgrade/Migrate Diagnostic Information (dbupgdiag.sql).

Running script on version 11.2.0.1 prior to upgrade, there was no performance issue.

The following SQL query from dbupgdiag.sql may run slowly (~2 hours) after applying patchset 11.2.0.3.

Prompt ===========================================

Prompt Tablespace and the owner of the aud$ table

Prompt ===========================================

select owner,tablespace_name from dba_extents where segment_name=’AUD$’ group by

owner,tablespace_name;CHANGES

An execution plan regression occurs if there are a large number of records in the X$KTFBUE table. Gathering of dictionary or fixed object stats does not improve performance as this table is intentionally omitted from those packages.CAUSE

The Cost Based Optimizer uses a cardinality estimate of 100,000 rows for this table, and the 11.2.0.3 execution plan is doing a full table scan. If you have a large number of extents, this query can take more than 1 hour to complete. There are a number of unpublished bugs open on slow perfomance for queries on DBA_EXTENTS.

For example, following bugs:

Bug:13542477 ON 11.2.0.3 QUERY DBA_EXTENTS IS SO SLOW

Bug:14221159 JOIN CARDINALITY INCORRECT CALCULATED AFTER FIX 11814428SOLUTION

Following are possible workarounds to improve the performance

Gathering stats on the X$KTFBUE table using the following command:

EXEC DBMS_STATS.GATHER_TABLE_STATS(‘SYS’, ‘X$KTFBUE’);

OR

alter session set “_optimizer_cartesian_enabled” = false;

OR

alter session set “_smm_auto_cost_enabled” = false;

OR

Disable fix for unpublished Bug Bug 11814428: POOR CARDINALITY ESTIMATE FROM JOIN WITH A UNIONALL VIEW:

alter session set “_FIX_CONTROL” = “11814428:0″;

This document reminded me the similar issue we run into last year when we built the same database for POC purpose. At the time when we tried to upgrade the POC database from 11.2.0.3 to 11.2.0.4. The dbupgdiag.sql script run forever and never finished. Opened SR with Oracle and Oracle Support recommended to comment out the following line in dbupgdiag.sql script as a workaround solution.

select owner,tablespace_name from dba_extents where segment_name=’AUD$’ group by owner,tablespace_name;

Finally after the stats collection completed, run the same query again, the result comes back within 15 seconds. Checked out the execution plan, I can see it does not use full table scan of the X$KTFBUE table, but use an index from this table. Issue solved and life is good.

Additional note:

1. So what exactly X$KTFBUE table is used for? X$KTFBUE is a dynamically generated map of used extents in locally managed tablespace.

The followings are the definitions for the columns in x$ktfbue.

ADDR : N/A

INDX : N/A

INST_ID : N/A

KTFBUESEGTSN : ts# containing this segment

KTFBUESEGFNO : Relative number of the file containing the segment header

KTFBUESEGBNO : segment header block number

KTFBUEEXTNO : Extent number

KTFBUEFNO : Relative number of the file containing the extent

KTFBUEBNO : Starting block number of the extent

KTFBUEBLKS : Size of the extent in ORACLE blocks

KTFBUECTM : commit_jtime,Commit Time of the undo in the extent expressed as Julian date

KTFBUESTT :commit_wtime,Commit Time of the undo in the extent expressed as wall clock time

KTFBUESTA : Transaction Status of the undo in the extent;1, ‘ACTIVE’, 2, ‘EXPIRED’, 3, ‘UNEXPIRED’, 0 for non‐undo

2. If looked at the execution plan, even we fixed the stats issue for X$KTFBUE table, we might still run into issue related to recyclebin. The plan shows nested loop involving recyclebin. Here is the DBA_FREE_SPACE looks like.

SQL> set long 90000;

SQL> set pages 999;

SQL> select dbms_metadata.get_ddl('VIEW','DBA_FREE_SPACE') FROM DUAL;

DBMS_METADATA.GET_DDL('VIEW','DBA_FREE_SPACE')

--------------------------------------------------------------------------------

CREATE OR REPLACE FORCE VIEW "SYS"."DBA_FREE_SPACE" ("TABLESPACE_NAME", "FILE_

ID", "BLOCK_ID", "BYTES", "BLOCKS", "RELATIVE_FNO") AS

select ts.name, fi.file#, f.block#,

f.length * ts.blocksize, f.length, f.file#

from sys.ts$ ts, sys.fet$ f, sys.file$ fi

where ts.ts# = f.ts#

and f.ts# = fi.ts#

and f.file# = fi.relfile#

and ts.bitmapped = 0

union all

select

ts.name, fi.file#, f.ktfbfebno,

f.ktfbfeblks * ts.blocksize, f.ktfbfeblks, f.ktfbfefno

from sys.ts$ ts, sys.x$ktfbfe f, sys.file$ fi

where ts.ts# = f.ktfbfetsn

and f.ktfbfetsn = fi.ts#

and f.ktfbfefno = fi.relfile#

and ts.bitmapped <> 0 and ts.online$ in (1,4) and ts.contents$ = 0

union all

select

ts.name, fi.file#, u.ktfbuebno,

u.ktfbueblks * ts.blocksize, u.ktfbueblks, u.ktfbuefno

from sys.recyclebin$ rb, sys.ts$ ts, sys.x$ktfbue u, sys.file$ fi

where ts.ts# = rb.ts#

and rb.ts# = fi.ts#

and u.ktfbuefno = fi.relfile#

and u.ktfbuesegtsn = rb.ts#

and u.ktfbuesegfno = rb.file#

and u.ktfbuesegbno = rb.block#

and ts.bitmapped <> 0 and ts.online$ in (1,4) and ts.contents$ = 0

union all

select ts.name, fi.file#, u.block#,

u.length * ts.blocksize, u.length, u.file#

from sys.ts$ ts, sys.uet$ u, sys.file$ fi, sys.recyclebin$ rb

where ts.ts# = u.ts#

and u.ts# = fi.ts#

and u.segfile# = fi.relfile#

and u.ts# = rb.ts#

and u.segfile# = rb.file#

and u.segblock# = rb.block#

and ts.bitmapped = 0

Right now our database was just built and recyclebin was empty. If there are a lot of rows in the recyclebin, I will not be surprised the performance could be bad again. If it happens, one possible solution is to purge the recyclebin.

3. Another interesting part is that the total number of rows in X$KTFBUE table was about 99 million rows while the total number of extents in the database was also around 99 million rows. So reducing the total number of extent in the database seem a good way to reduce this stats issue. Pay attention to the INITIAL and NEXT in the storage clause. Setting too small will have a large number of extents and create headache in querying fixed tables.

You must be logged in to post a comment.